Provable Exploration in Bandit Problems: From Optimality to Efficiency

Lunch will be served at 11:45 AM.

ABSTRACT

A bandit problem is a succinct mathematical formulation of sequential decision-making in an uncertain environment, where an agent learns to maximize its expected cumulative reward by repeatedly interacting with an unknown environment. At the core of any bandit algorithm lies the dilemma between exploiting the good actions it has learned in the past to obtain high rewards and exploring uncertain actions for making better decisions in the future. In this talk, I will cover main exploration strategies including Upper Confidence Bound (UCB) and Thompson Sampling (TS) for solving stochastic bandits (the reward is sampled from an unknown stationary distribution) and contextual bandits (the reward is approximated by a function of some context information). I will first show that carefully designed algorithms using UCB and TS can achieve the optimal worst-case regret bound. Then I will show how these strategies are extended to contextual bandits where side information (contexts) of actions are available. Specifically, I will present recent works on the efficient exploration of contextual bandits where the reward is modeled by nonlinear functions such as deep neural networks, demonstrating that decoupling representation and exploration, and approximate sampling are efficient exploration strategies in large-scale and high-dimensional bandit problems.

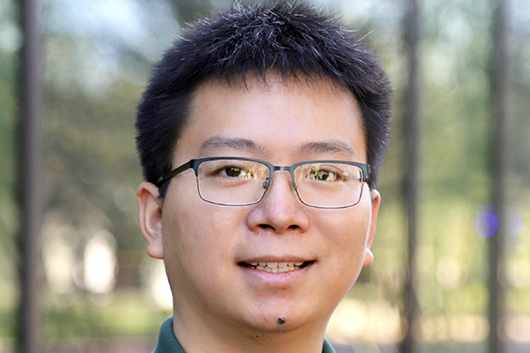

SPEAKER BIO

Pan Xu is an assistant professor in the Department of Biostatistics and Bioinformatics at Duke University. He mainly works on Machine Learning, which spans the areas of Artificial Intelligence, Data Science, Optimization, Reinforcement Learning, and High Dimensional Statistics. His research goal is to develop computationally- and data-efficient machine learning algorithms with both strong empirical performance and theoretical guarantees.